For chip makers, the pressure has been to produce smaller, more powerful components for each generation of phones. Denser, faster, cheaper — these mantras have driven our industry for as long as most people can remember.

But there’s a new game in town. The smartphone era is not over, but the growth rate is slowing. The key growth driver in hardware could soon be the Internet of Things. Over the next decade, this industry will churn out tens of billions of connected sensor devices. These will be used in every corner of the world — from highways to arteries — to gather new insights to help us live and work better.

This new chapter will reshape the technology hardware industry in profound ways, and may even reverse many of the changes brought about by the smartphone era. To understand how far-reaching this shift could be, it’s important to know how past markets have shaped the way computers are built.

It started with the circuit board

Just a few short decades ago, computers filled entire rooms. In these early days, manufacturers produced each component separately and wired them together on a circuit board. You’d have memory in one part of the board, logic processing on another side, maybe a radio in the corner. Wires or copper traces connected each piece, and components could be easily added or removed from the system.

The “System on a Board” configuration worked for a while. But then computers began to shrink as scientists engineered smaller and smaller transistors. Transistors are like electric switches — the fundamental building blocks of modern computing.

In 1965, Gordon Moore, who later co-founded Intel, made a famous prediction (misleadingly labeled a “law”). In its commonly cited form: every 18-24 months, engineers would fit about twice as many transistors on a given piece of silicon. Computer components started shrinking fast, and suddenly a lot of free space opened up on circuit boards.
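
To get a feel for how quickly that doubling compounds, here is a minimal sketch of the arithmetic. The function name and the ten-year horizon are illustrative choices, not part of Moore's prediction itself.

```python
def transistor_growth(years: float, doubling_months: float) -> float:
    """Growth factor in transistor count after `years`,
    assuming the count doubles every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return 2 ** doublings


# Compare the two ends of the commonly cited 18-24 month range
# over an illustrative ten-year span.
for months in (18, 24):
    factor = transistor_growth(10, months)
    print(f"Doubling every {months} months: about {factor:,.0f}x in 10 years")
```

Over a decade, that works out to roughly 32 times as many transistors at the slow end of the range and roughly 100 times as many at the fast end.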

The master chip

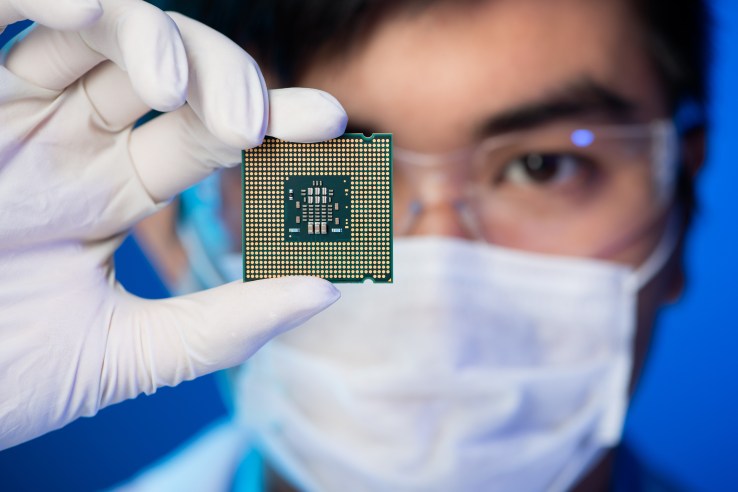

Engineers soon began to experiment with putting multiple functions on a single piece of silicon. Before long, they could get a whole computer onto that one piece of silicon, wrap it up nicely and market it as a single, all-inclusive package.

We call this “System on a Chip” (SoC). You probably have one in your smartphone. This tight integration of components carries some big advantages. With components packed closer together, signals can travel between them more quickly, which can increase processing speed.

SoCs are frequently cheaper too; instead of testing many components independently, you can run one set of tests on a single chip. And, of course, size matters. The consolidated package helped manufacturers like Apple and Samsung produce new generations of lighter, sleeker devices.

But there’s a big drawback. SoCs are manufactured on common process platforms in large manufacturing facilities called “fabs.” These mega-factories are able to produce hundreds of millions of chips per month.

The challenge in the SoC paradigm is that all the components in a single chip (processor, radio, memory, etc.) are locked into a single manufacturing process, which does not always provide the “best in class” for each component. For example, one process platform may be excellent for processors, but just mediocre for embedded flash memory. And it’s difficult to upgrade or switch out components individually without upgrading the entire fab.

For smartphones, and many other applications, the benefits of an integrated SoC generally outweigh this drawback. However, the emergence of a new hardware era introduces a new set of challenges for chip makers.

New rules in the era of “things”

Let’s look at the Internet of Things. This is the hardware industry of the future, and it will run on billions of sensor devices. But the problem is, these devices will exist in all sorts of environments. Some will live in factories; some will be outside; some will collect data underwater. The basic functionality of these smart nodes is very similar (sense data, collect data, store data, communicate data); however, the deployment requirements vary greatly.

A sensor node in a car engine, for example, will need to withstand high temperatures. Sensor nodes spread across farmland might require powerful radio components to send data over long distances. Most sensors will need to operate at very low power consumption (because they won’t be plugged in), but for some this will matter even more than for others.

Even more confusing, at this point we simply don’t know the exact requirements for most IoT applications. It’s just too early in the process. But we have to start building hardware for it anyway! This presents all kinds of challenges to existing models of chip production.

Watch for disintegration

The PC and smartphone industries were able to deploy the same chip designs for hundreds of millions of units. The giant integrated SoC fabs were well-suited to this. But IoT is different; it will likely consist of thousands of one-million-unit applications. This would suggest the need for a much greater diversity of chip configurations than we’ve seen to date.
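
One way to see why volume matters so much: a chip design carries a fixed up-front cost that gets spread across every unit sold. The sketch below is a simplified model, and every dollar figure in it is a hypothetical placeholder chosen only to show the shape of the arithmetic, not real industry data.

```python
def cost_per_unit(design_cost: float, unit_cost: float, volume: int) -> float:
    """Per-chip cost when a fixed design cost is spread over `volume` units."""
    return design_cost / volume + unit_cost


# Hypothetical figures, for illustration only.
DESIGN_COST = 20_000_000  # assumed one-time cost to design the chip
UNIT_COST = 2.00          # assumed manufacturing cost per chip

# A smartphone-scale run versus a single one-million-unit IoT application.
for volume in (100_000_000, 1_000_000):
    total = cost_per_unit(DESIGN_COST, UNIT_COST, volume)
    print(f"{volume:>11,} units: ${total:.2f} per chip")
```

Under these assumed numbers, the fixed cost nearly disappears at smartphone volumes but dominates the price of each chip at one million units, which is one reason a design approach tuned for huge runs may not suit smaller IoT markets.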

As a result, other models for constructing chips are emerging. They go by names such as multi-chip modules, 2.5D, and System in a Package (SiP). All involve packing components closely together, without the complete, end-to-end integration of SoC. The equations governing cost, performance and power consumption of these approaches are beginning to tilt the balance away from SoC as the favored choice for IoT smart nodes.

In some ways, the great trends of the PC and smartphone eras were toward standardization of devices. Apple’s great insight was that people prefer a beautiful, integrated package, and don’t need many choices in hardware. But in software it’s generally the opposite. People have different needs, and want to select the apps and programs that work best for them.

In a smart, connected world, sensor requirements could vary greatly from factory to factory, not to mention between industries as varied as agriculture, urban planning and automotive. Just as smartphone owners like to pick and choose which apps they want, IoT manufacturers may want to shop for components individually without being locked into a single fab.

It’s hard to overstate how fundamental this shift could be. The $300+ billion semiconductor industry has grown up around the standardized hardware of PCs and smartphones — basically, boxes that live indoors and plug into walls. IoT, on the other hand, will require a huge diversity of hardware offerings. Get ready for some big changes in the “silicon” of Silicon Valley.

Posted by Narbeh Derhacobian